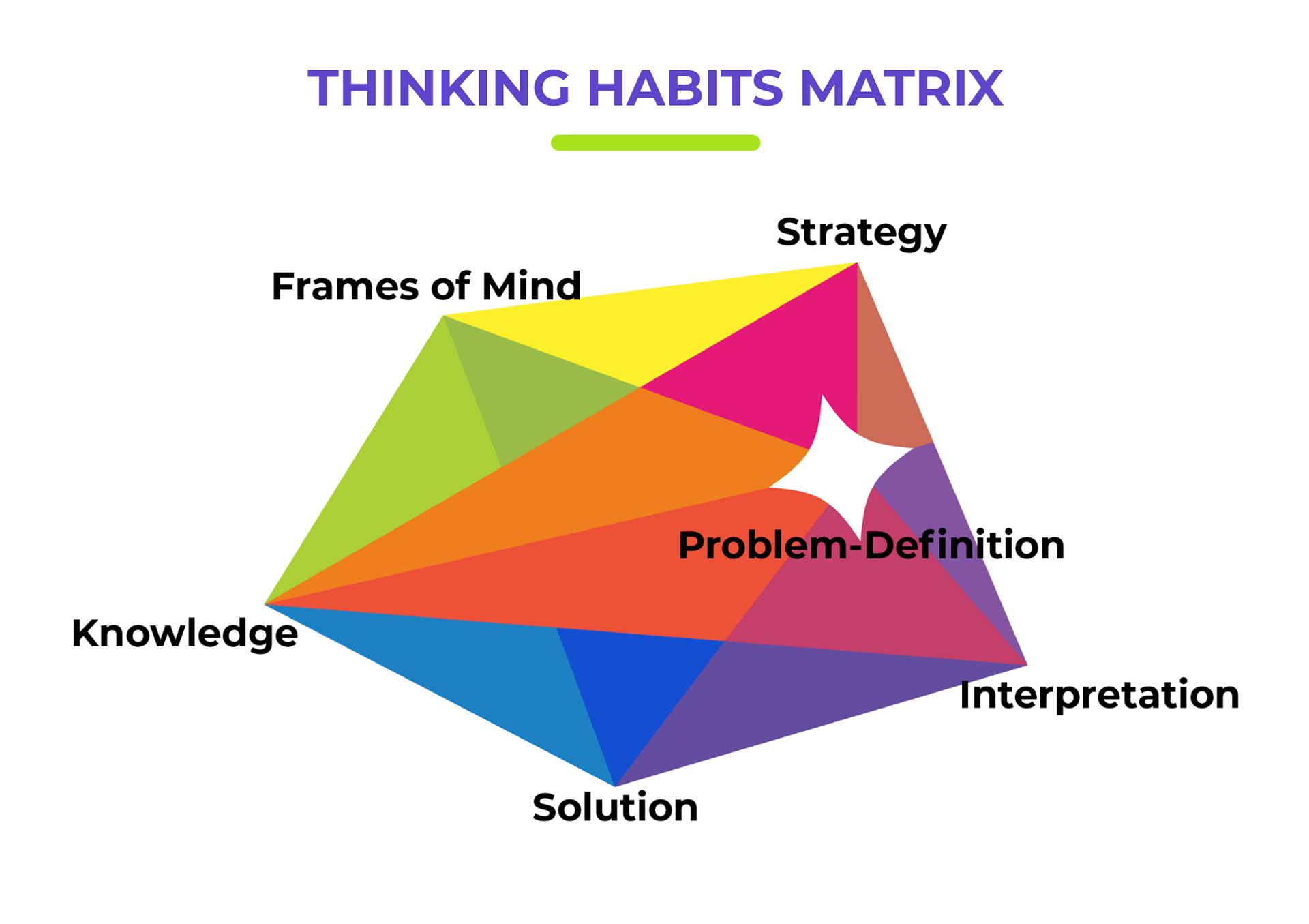

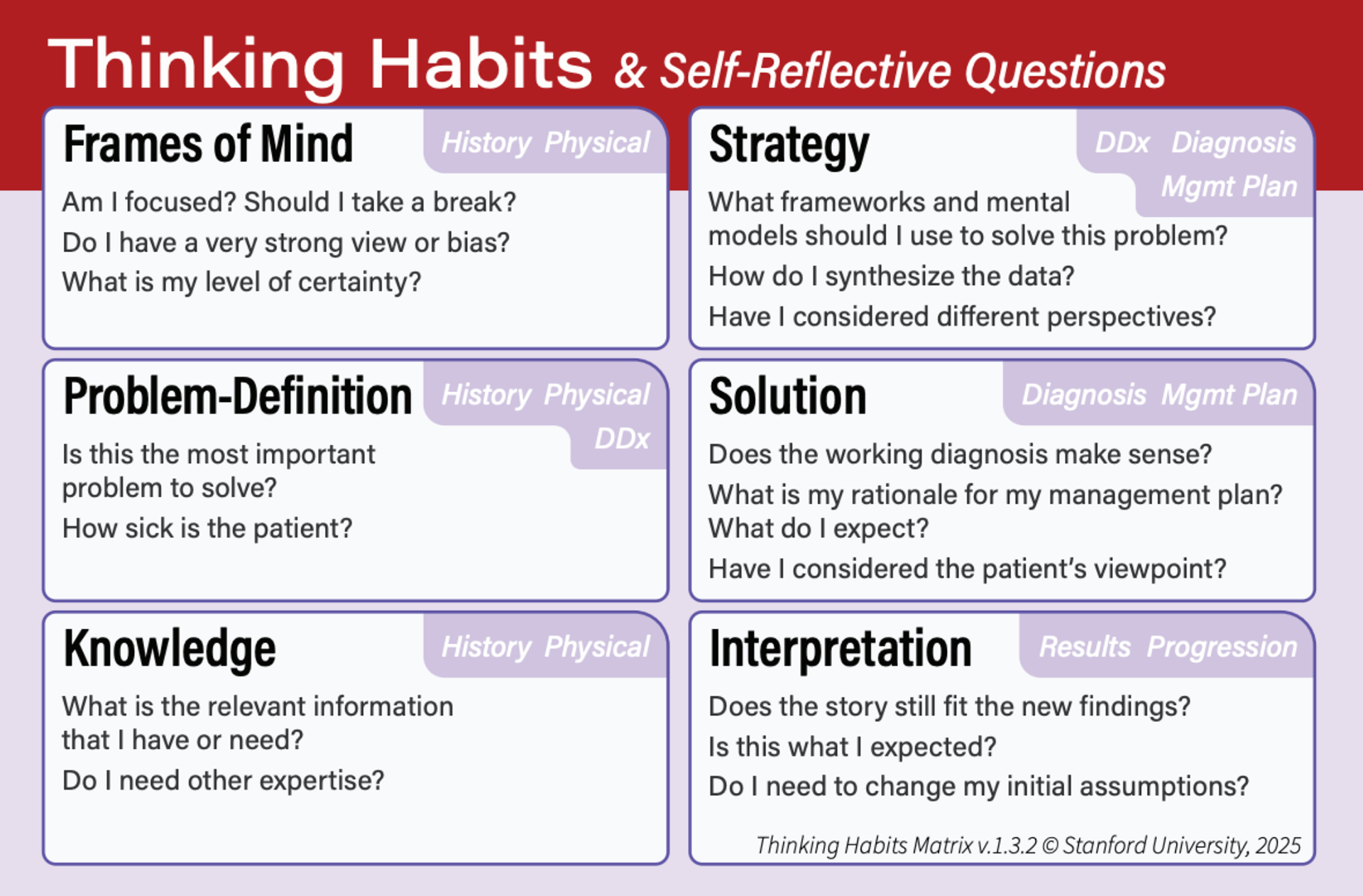

This diagram from our colleagues at the Stanford Graduate School of Education summarizes their finding that experts across dozens of STEM fields use many of the same problem-solving techniques and that all of them require self-reflection. My colleagues Shima Salehi, Marcos Rojas, Sharon Chen, and Kathleen Gutierrez have demonstrated that this model of "expert problem-solving" extends to clinical practice as well.

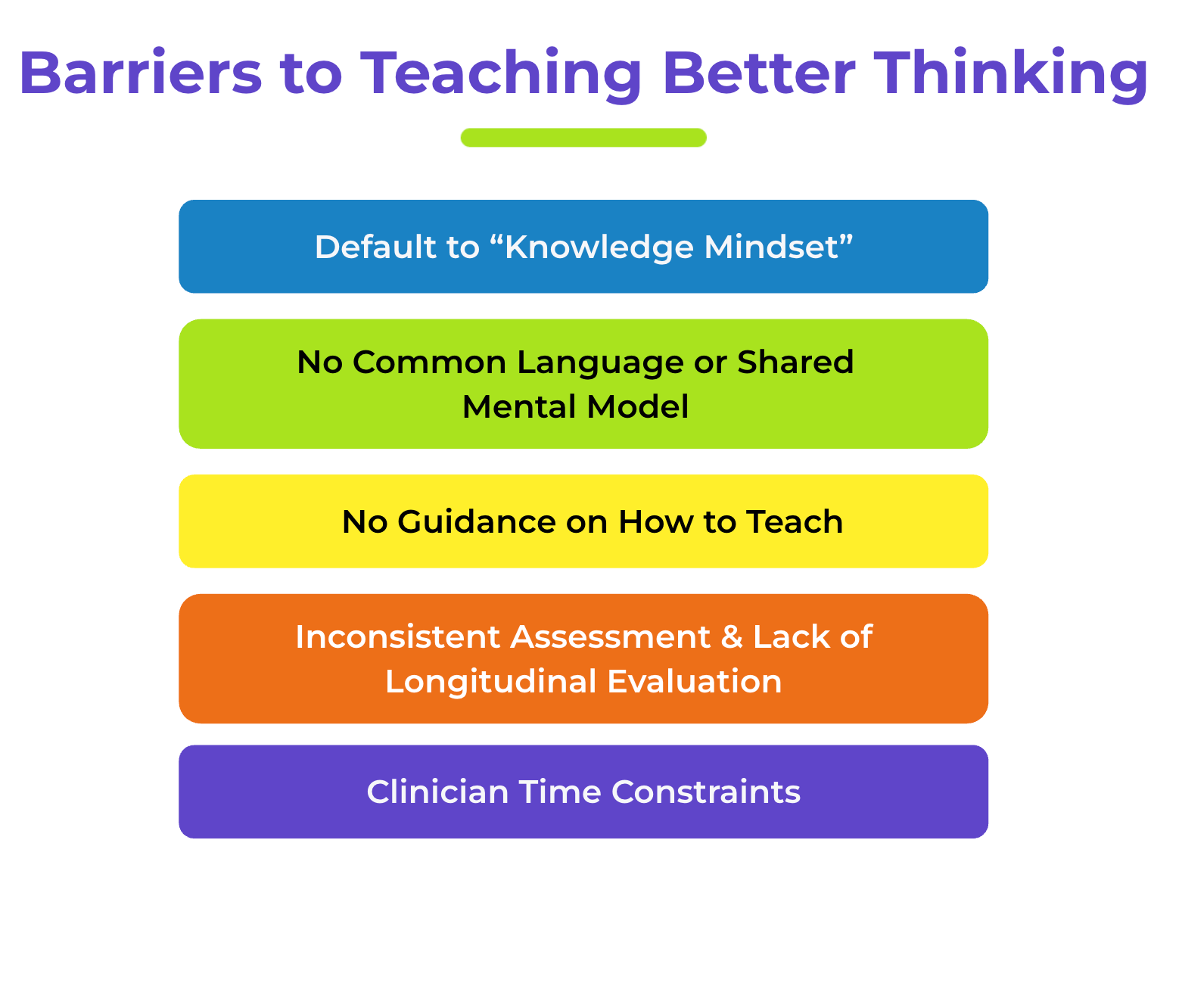

From the beginning, medical education has centered on knowledge above all else: the more you know, the better you are. There are many reasons for this (knowledge is easier to teach and to test, for example), but the "knowledge mindset" of medical training is becoming obsolete. Generative AI is already becoming the primary source of knowledge in clinical workplaces. Every physician I know uses some sort of AI tool, such as OpenEvidence, to support their decision-making.

If humans are going to remain relevant in this era, we believe medical education needs to be re-centered on a "thinking mindset" culture, one that emphasizes developing the interpretive skills and judgment needed to apply AI-generated knowledge.

This belief led us to form the AI Clinical Coach team, which studies the effects of AI on thinking skills through both quantitative and product-led research and tests solutions in real clinical environments.

The AI Clinical Coach app in 2025.

The Thinking Habit Matrix

Self-reflective questions for each thinking habit

• Product design lead (UX/UI, information architecture, interaction design)

• Project vision, strategy, budgeting, and funding

• Prompt engineering and model behavior shaping

• Team formation and leadership

• Small language model (SLM) product development

Workshop activities like this helped us teach about thinking habits, while also getting feedback on the language and activities we were integrating into the app.

A user journey map I created early on that aligned our user experience with the AI workflow I designed.

AI Clinical Coach tracks learners' thinking habits over time.

Three of our core pages: the learner profile, the recording screen, and the Thinking Habits Report.

Automated refinements are coming: users can rate our coaching questions in the app, and we plan to use that feedback to refine the outputs of our planned small language model.

Example coaching questions generated by AI Clinical Coach.

The biggest challenge we encountered was structural: every attending had difficulty simply remembering to use the application during their work, and they cited existing cognitive overload as the main reason. Our most frequent users instead used the application at the end of the service week or in the evenings, as a personal coaching tool.